Introduction

Accurate pose estimation of nearby objects is critical for robots that must interact dynamically with their surroundings. The complexity of this task has led researchers to explore deep learning methods, and many recent works focus solely on developing complicated neural network architectures that estimate pose from a single monocular camera. However, most of these methods struggle with the inherent limitations of a single-sensor system, such as occlusion, which is commonly encountered in mobile robotics applications. Online, occlusion-robust pose estimation is especially important in such cases, because the robot's own mobility introduces significant uncertainty that complicates manipulation. We therefore present Multi-Sensor aided Deep Pose Tracking (MS-DPT), a framework for online object pose estimation that enables robust mobile manipulation.

Performance of MS-DPT

Single Camera-based Estimation vs. Fused Estimation (OURS)

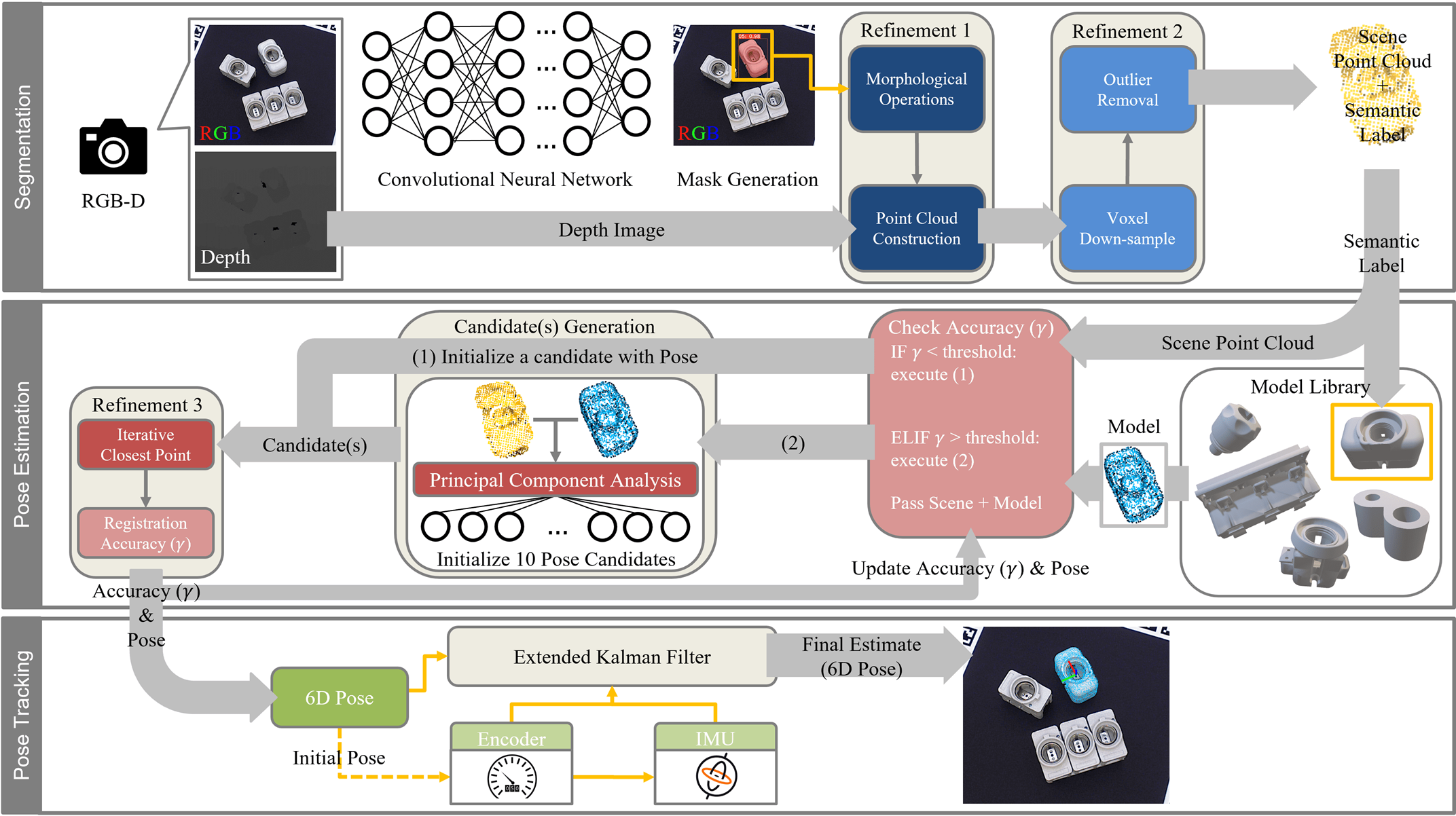

In MS-DPT, a Convolutional Neural Network (CNN) identifies key objects in an RGB-D image, and object pose estimates are then generated using a variant of the Iterative Closest Point (ICP) algorithm. An extended Kalman filter fuses these pose estimates with measurements from onboard motion sensors to compensate for occlusion and robot motion during manipulation.
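To make the ICP stage concrete, the following is a minimal sketch of plain point-to-point ICP, reduced to 2D so that the alignment step has a simple closed form. It is not the ICP variant used in MS-DPT, which is not detailed here. The function names (`align_2d`, `icp_2d`, and the helpers) are hypothetical, and the brute-force nearest-neighbour search is an assumption made for brevity.

```python
import math

def align_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired points src onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # centred source point
        bx, by = qx - dx, qy - dy  # centred target point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))

def transform(points, theta, t):
    """Rotate points by theta, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]

def mean_sq_nn_error(src, dst):
    """Mean squared distance from each src point to its nearest dst point."""
    return sum(min((x - u) ** 2 + (y - v) ** 2 for u, v in dst) for x, y in src) / len(src)

def icp_2d(src, dst, iters=20):
    """Alternate nearest-neighbour matching and rigid alignment (hypothetical sketch)."""
    theta_total, t_total = 0.0, (0.0, 0.0)
    cur = list(src)
    for _ in range(iters):
        # Brute-force correspondence search: nearest target point for each current point.
        matched = [min(dst, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2) for p in cur]
        theta, t = align_2d(cur, matched)
        cur = transform(cur, theta, t)
        # Compose the incremental transform with the accumulated one.
        c, s = math.cos(theta), math.sin(theta)
        t_total = (c * t_total[0] - s * t_total[1] + t[0],
                   s * t_total[0] + c * t_total[1] + t[1])
        theta_total += theta
    return theta_total, t_total
```

In a 6-DoF setting the closed-form angle would typically be replaced by an SVD-based rotation estimate, and the CNN detection would restrict which depth points are passed to the alignment.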

Heavy Occlusion & Robotic Grasping

This three-stage method combines different sensing modalities to improve the stability and continuity of pose tracking when the target object becomes heavily occluded by an obstacle or by the mobile robot itself. In experiments, the proposed approach accurately tracks textureless, highly symmetric objects while operating at 10 FPS.
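The way sensor fusion keeps a track alive through occlusion can be illustrated with a deliberately simplified filter. The sketch below is a scalar, linear Kalman filter for one axis of the object's position relative to the robot, not the extended Kalman filter over full pose used in MS-DPT. The class name `PoseAxisFilter`, the noise values, and the motion model (the relative position shrinks by the robot's forward displacement) are all assumptions chosen for illustration.

```python
class PoseAxisFilter:
    """Scalar Kalman filter for one axis of object position relative to the robot.

    Hypothetical simplification: motion sensing drives the prediction step, and a
    vision measurement corrects it only when the object is visible.
    """

    def __init__(self, x0=0.0, p0=1e6, q=1e-4, r=1e-2):
        self.x = x0  # estimated relative position (m)
        self.p = p0  # estimate variance
        self.q = q   # process noise added per prediction (assumed value)
        self.r = r   # vision measurement noise variance (assumed value)

    def predict(self, robot_displacement):
        # The robot moving toward the object reduces the relative position.
        self.x -= robot_displacement
        self.p += self.q

    def update(self, z):
        # z is None while the object is occluded: keep the motion-only prediction.
        if z is None:
            return
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)


def track(measurements, step):
    """Run the filter over vision measurements (None = occluded) with constant robot steps."""
    f = PoseAxisFilter()
    history = []
    for z in measurements:
        f.predict(step)
        f.update(z)
        history.append((f.x, f.p))
    return history
```

For example, `track([1.9, 1.8, 1.7, None, None, None], 0.1)` keeps producing estimates during the three occluded steps by propagating the robot's motion alone, while the variance grows until a new vision measurement arrives.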

MECC Conference

Citation

@article{lee2022multi,
  title={Multi-sensor aided deep pose tracking},
  author={Lee, Hojun and Toner, Tyler and Tilbury, Dawn and Barton, Kira},
  journal={IFAC-PapersOnLine},
  volume={55},
  number={37},
  pages={326--332},
  year={2022},
  publisher={Elsevier}
}